We ended the previous post with a mechanical transformation of the C++ AMP matrix multiplication example to the tiled model and in the process introduced tiled_index and tiled_extent. This is part 2.

tile_static memory

You all know that in regular CPU code, static variables have the same value regardless of which thread accesses the static variable. This is in contrast with non-static local variables, where each thread has its own copy.

Back to C++ AMP, the same rules apply and each thread has its own value for local variables in your lambda, whereas all threads see the same global memory, which is the data they have access to via the array and array_view.

In addition, on an accelerator like the GPU, there is a programmable cache, a third kind of memory if you'd like to think of it that way (some call it shared memory, others call it scratchpad memory). A variable stored in that memory has one value that is shared by every thread in the same tile. So, when you use the tiled model, you can have variables where all threads in the same tile see the same value, while threads from other tiles see their own tile's copy instead. The new storage class for local variables introduced for this purpose is called tile_static. You can only use tile_static in restrict(amp) functions, and only when explicitly using the tiled model. What this looks like in code should be no surprise, but here is a snippet to confirm your mental image, using a good old regular C array:

// each tile of threads has its own copy of locA,

// shared among the threads of the tile

tile_static float locA[16][16];

Note that tile_static variables are scoped and have the lifetime of the tile, and they cannot have constructors or destructors.

tile_barrier

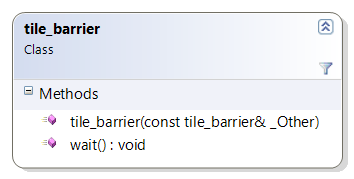

In amp.h one of the types introduced is tile_barrier. You cannot construct this object yourself (although if you had one, you could use a copy constructor to create another one). So how do you get one of these? You get it from a tiled_index object. Beyond the 4 properties returning index objects, tiled_index has another property, barrier, that returns a tile_barrier object. The tile_barrier class exposes the method wait (and other wait variants).

15: // Given a tiled_index object named t_idx

16: t_idx.barrier.wait();

17: // more code

…in the code above, all threads in the tile will reach line 16 before a single one progresses to line 17. Note that all threads must be able to reach the barrier, i.e. if your code branches in a way that could prevent some threads in the tile from reaching line 16, then the code above would be illegal.
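If it helps to see why the barrier matters, here is a minimal CPU-side sketch in plain C++ (no amp.h). It is only a model: the function names and the "read your right neighbour's slot" workload are made up for illustration, and a real accelerator does not promise any particular thread order. The first function splits the work into two loops, which plays the role of t_idx.barrier.wait(). The second function shows one possible schedule without a barrier, where each thread reads before its neighbour has written.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model of one tile: tile_size "threads" share one scratch array,
// the way threads in a tile share a tile_static array.

// With a barrier: every thread finishes its write (phase 1) before any thread
// reads its neighbour's slot (phase 2).
std::vector<int> read_neighbour_with_barrier(std::size_t tile_size) {
    assert(tile_size > 0);
    std::vector<int> shared(tile_size, 0);   // stands in for tile_static storage
    std::vector<int> result(tile_size, 0);   // one private result per thread

    for (std::size_t t = 0; t < tile_size; ++t)   // phase 1: write own slot
        shared[t] = static_cast<int>(t) + 1;

    // The gap between the two loops is where t_idx.barrier.wait() would sit.

    for (std::size_t t = 0; t < tile_size; ++t)   // phase 2: read right neighbour
        result[t] = shared[(t + 1) % tile_size];
    return result;
}

// Without a barrier: one possible schedule in which each thread writes and then
// immediately reads, before the next thread has run at all.
std::vector<int> read_neighbour_without_barrier(std::size_t tile_size) {
    assert(tile_size > 0);
    std::vector<int> shared(tile_size, 0);   // zero-filled so stale reads show up as 0
    std::vector<int> result(tile_size, 0);

    for (std::size_t t = 0; t < tile_size; ++t) {
        shared[t] = static_cast<int>(t) + 1;
        result[t] = shared[(t + 1) % tile_size];  // neighbour may not have written yet
    }
    return result;
}
```

In this model the version with the barrier always sees its neighbour's value, while in the version without it most threads read a slot that has not been filled in yet.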

Tiled Matrix Multiplication Example – part 2

So now that we have added the concepts of tile_static and tile_barrier to our understanding, let me obfuscate (sorry, rewrite) the matrix multiplication code so that it takes advantage of tile_static memory. Before you start reading this, I suggest you get a cup of your favorite non-alcoholic beverage to enjoy while you try to fully understand the code.

01: void MatrixMultiplyTiled(vector<float>& vC,

const vector<float>& vA,

const vector<float>& vB, int M, int N, int W)

02: {

03: static const int TS = 16;

04: array_view<const float,2> a(M, W, vA);

05: array_view<const float,2> b(W, N, vB);

06: array_view<float,2> c(M,N,vC); c.discard_data();

07: parallel_for_each(c.extent.tile< TS, TS >(),

08: [=] (tiled_index< TS, TS> t_idx) restrict(amp)

09: {

10: int row = t_idx.local[0]; int col = t_idx.local[1];

11: float sum = 0.0f;

12: for (int i = 0; i < W; i += TS) {

13: tile_static float locA[TS][TS], locB[TS][TS];

14: locA[row][col] = a(t_idx.global[0], col + i);

15: locB[row][col] = b(row + i, t_idx.global[1]);

16: t_idx.barrier.wait();

17: for (int k = 0; k < TS; k++)

18: sum += locA[row][k] * locB[k][col];

19: t_idx.barrier.wait();

20: }

21: c[t_idx.global] = sum;

22: });

23: }

Notice that all the code up to line 9 is the same as it was after the changes we made in part 1 of the tiling introduction. If you squint, the body of the lambda itself preserves the original algorithm on lines 10, 11, 17, 18, and 21. The difference is that those lines use new indexing and the tile_static arrays; the tile_static arrays are declared and initialized on the brand new lines 13-15. On those lines we copy from the global memory represented by the array_view objects (a and b) to the tile_static vanilla arrays (locA and locB), and we copy just enough to fit a tile.

Because the code that follows on line 18 expects the data for this tile to be in the tile_static storage, we need to synchronize the threads within each tile with a barrier, which we do on line 16 (to avoid accessing uninitialized memory on line 18). We also need to synchronize the threads within a tile on line 19, this time to avoid a race between lines 14 and 15 (which retrieve the next set of data for each tile and overwrite the previous set) and line 18 (which may not be done processing the previous set of data). Luckily, as part of the awesome C++ AMP debugger in Visual Studio there is an option that helps you find such races, but that is a story for another blog post another time.
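If pen and paper is not your thing, the same walk-through can be done on the CPU. The following is a plain C++ sketch (no amp.h) that emulates the tiled kernel above one tile at a time. It is an illustration under stated assumptions, not how C++ AMP schedules anything: the function names, the tiny tile size of 4, and the row-major std::vector layout are choices made for this example only. Each tile gets its own locA and locB, each emulated thread gets its own sum, and the boundaries between the inner loops stand in for the two barrier calls on lines 16 and 19.

```cpp
#include <cassert>
#include <vector>

constexpr int TS = 4;  // hypothetical small tile size, for easy tracing

// Reference: the simple, non-tiled algorithm on row-major data.
std::vector<float> multiply_naive(const std::vector<float>& a,
                                  const std::vector<float>& b,
                                  int M, int N, int W) {
    std::vector<float> c(M * N, 0.0f);
    for (int row = 0; row < M; ++row)
        for (int col = 0; col < N; ++col)
            for (int k = 0; k < W; ++k)
                c[row * N + col] += a[row * W + k] * b[k * N + col];
    return c;
}

// CPU emulation of the tiled kernel: the outer two loops walk over tiles,
// the inner loops play the threads of one tile.
std::vector<float> multiply_tiled_emulated(const std::vector<float>& a,
                                           const std::vector<float>& b,
                                           int M, int N, int W) {
    assert(M % TS == 0 && N % TS == 0 && W % TS == 0);
    std::vector<float> c(M * N, 0.0f);
    for (int tileRow = 0; tileRow < M; tileRow += TS) {
        for (int tileCol = 0; tileCol < N; tileCol += TS) {
            float locA[TS][TS], locB[TS][TS];  // one copy per tile (tile_static)
            float sum[TS][TS] = {};            // one private sum per thread
            for (int i = 0; i < W; i += TS) {
                // Kernel lines 14-15: every thread copies one element of a and b.
                for (int row = 0; row < TS; ++row)
                    for (int col = 0; col < TS; ++col) {
                        locA[row][col] = a[(tileRow + row) * W + (col + i)];
                        locB[row][col] = b[(row + i) * N + (tileCol + col)];
                    }
                // Kernel line 16: first barrier (all loads done before any use).
                for (int row = 0; row < TS; ++row)
                    for (int col = 0; col < TS; ++col)
                        for (int k = 0; k < TS; ++k)
                            sum[row][col] += locA[row][k] * locB[k][col];
                // Kernel line 19: second barrier (all uses done before next load).
            }
            // Kernel line 21: each thread writes its own result.
            for (int row = 0; row < TS; ++row)
                for (int col = 0; col < TS; ++col)
                    c[(tileRow + row) * N + (tileCol + col)] = sum[row][col];
        }
    }
    return c;
}
```

Feeding both functions the same inputs should give the same output, which is a quick way to convince yourself that the tiled indexing on lines 14 and 15 really does cover every element the original algorithm touched.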

May I suggest reading the next section, and then coming back to re-read and walk through this code with pen and paper to really grok what is going on, if you haven't already? Cool.

Why would I introduce this tiling complexity into my code?

Funny you should ask that, I was just about to tell you. There is only one reason we tiled our extent, had to deal with finding a good tile size, had to ensure that the number of threads we schedule is evenly divisible by the tile size, had to use a tiled_index instead of a normal index, had to understand tile_barrier and figure out where we need to use it, and doubled the size of our lambda in terms of lines of code: the reason is to be able to use tile_static memory.

Why do we want to use tile_static memory? Because accessing tile_static memory is around 10 times faster than accessing the global memory on an accelerator like the GPU, e.g. in the code above, if you can get 150GB/second accessing data from the array_view a, you can get 1500GB/second accessing the tile_static array locA. And since by definition you are dealing with really large data sets, the savings really pay off. We have seen tiled implementations being twice as fast as their non-tiled counterparts.
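To see where the saving comes from in the matrix multiplication example, it helps to count how many elements the code asks for from global memory. In the simple version every one of the M x N threads reads W elements of a and W elements of b. In the tiled version every thread reads one element of each per pass through the outer loop, and there are W / TS passes. The sketch below just does that arithmetic. The function names are made up for this post, and the counts describe what the code requests, not what the hardware ends up doing, since hardware caches and access patterns also play a part.

```cpp
#include <cassert>
#include <cstdint>

// Element reads from global memory requested by the simple (non-tiled) kernel:
// each of the M*N threads reads W elements of a and W elements of b.
std::uint64_t global_reads_simple(std::uint64_t M, std::uint64_t N, std::uint64_t W) {
    return 2 * M * N * W;
}

// Element reads from global memory requested by the tiled kernel:
// each of the M*N threads reads one element of a and one of b per outer-loop
// pass, and there are W / ts passes. Everything else is served from tile_static.
std::uint64_t global_reads_tiled(std::uint64_t M, std::uint64_t N, std::uint64_t W,
                                 std::uint64_t ts) {
    assert(ts > 0 && W % ts == 0);
    return 2 * M * N * (W / ts);
}
```

For 1024 x 1024 matrices and a tile size of 16, that works out to 2,147,483,648 requested reads for the simple version against 134,217,728 for the tiled one, i.e. a factor equal to the tile size. The measured speedup is usually smaller than that ratio because global memory reads are not the only cost in the kernel.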

Now, some algorithms will not see performance benefits from tiling (and may in fact get slower), e.g. algorithms that only need to go to global memory once may not benefit from tiling, since with tiling you still have to fetch the data once from global memory! Other algorithms may benefit, but you may decide that you are happy with your code being 50 times faster than the serial version you had, and you do not need to invest to make it 100 times faster. Also, algorithms with more than 3 dimensions, which C++ AMP supports in the simple model, cannot be tiled.

Also note that in future releases, we may invest in making the non-tiled model, which already uses tiling under the covers, go the extra step and use tile_static memory on your behalf, but it is obviously way too early to commit to anything like that, and we certainly don't do any of that today.