Sat, September 3, 2011, 08:32 PM under GPGPU | ParallelComputing

Overview

We saw previously that accelerator represents a target for our C++ AMP computation or memory allocation and that there is a notion of a default accelerator. We ended that post by introducing how one can obtain accelerator_view objects from an accelerator object through the accelerator class's default_view property and the create_view method.

The accelerator_view objects can be thought of as handles to an accelerator.

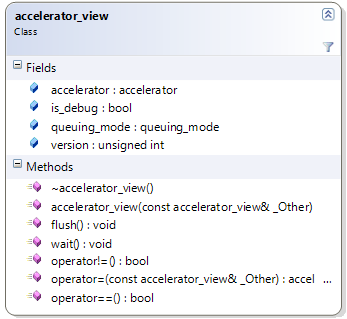

You can also construct an accelerator_view from another accelerator_view (through the copy constructor or the assignment operator overload). Speaking of operator overloading, you can also compare two accelerator_view objects for equality and inequality to determine if they refer to the same underlying accelerator.

We'll see later that when we use concurrency::array objects, the allocation of data takes place on an accelerator at array construction time, so there is a constructor overload that accepts an accelerator_view object. We'll also see later that a new concurrency::parallel_for_each function overload can take an accelerator_view object, so it knows on what target to execute the computation (represented by a lambda that the parallel_for_each also accepts).

Beyond normal usage, accelerator_view is a quality of service concept that offers isolation to multiple "consumers" of an accelerator. If in your code you are accessing the accelerator from multiple threads (or, in general, from different parts of your app), then you'll want to create separate accelerator_view objects for each thread.

flush, wait, and queuing_mode

When you create an accelerator_view via the create_view method of the accelerator, you pass in an option of queuing_mode_immediate or queuing_mode_automatic, which are the two members of the queuing_mode enum. At any point you can access this value from the queuing_mode property of the accelerator_view.

When the queuing_mode value is queuing_mode_automatic (which is the default), any commands sent to the device, such as kernel invocations and data transfers (e.g. parallel_for_each and copy, as we'll see in future posts), will get submitted as soon as the runtime sees fit (that is the definition of automatic).

When the value of queuing_mode is queuing_mode_immediate, the commands will be submitted/flushed immediately.

To send all buffered commands to the device for execution, there is a non-blocking flush method that you can call. If you wish to block until all the submitted commands have finished executing, there is a wait method you can call (which also flushes). You can read more to understand C++ AMP's queuing_mode.

Querying information

Just like accelerator, accelerator_view exposes the is_debug and version properties. In fact, you can always access the accelerator object from the accelerator property on the accelerator_view class to access the accelerator interface we looked at previously.

The accelerator_view class also exposes a function that helps you stay aware of the progress of execution. You can read more about accelerator_view::create_marker.

Interop with D3D (aka DX)

If your app uses C++ AMP to compute data and also uses DirectX rendering shaders (e.g. pixel shaders), you can benefit by integrating C++ AMP into your graphics pipeline. One of the building blocks for that is being able to use the same device context from both the compute kernel and the other shaders. You can do that by going from accelerator_view to device context (and vice versa) through part of our interop API in amp.h: get_device and create_accelerator_view. You can read more on DirectX interop.